Dimensionality Matrix Notation

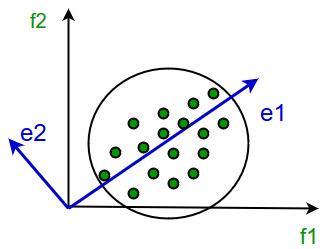

The eigenvectors define the new space Project two red points on blue e that preserves greatest variability range of variance instead of green e. Theith element of ais written asai orai.

What S The Best Way To Write Matrix Together With Its Dimensions On Top And Left Tex Latex Stack Exchange

W X y i 1 ix i.

Dimensionality matrix notation. The number preceding the boldface irreducible representation dimensionality 2 s1 label indicates multiplicity of that representation in the representation reduction. With one column its a column vector so it has one dimension. X or A0 Rows.

Three-Dimensional Vector and Matrix Notation r i global position of the origin of reference frame attached to body i P r i global position of point P attached to bodyi. In the remainder of the paper we adopt the notation presented above and we assume the dataset X is zero-mean. The low-dimensional counterpart of x i.

This is the opposite correspondence of rows and columns to features and training examples. Nonnegative Matrix Factorization for Semi-supervised Dimensionality Reduction 5 representation in eq. Then ˆ D 1 D 1 with ˆ ˆij is the cross-correlation matrix of order.

If m is 3 its three-dimensional if its 1 its unidimensional. In science sometimes we have to talk about very large and very small numbers. N_x original input features Columns.

Z y x r global position of point B attached to body 4 r i global velocity of. For brevity of notation we suppress the flrst subscript n in some situations where the dependence on n is self-evident. ˆij ij p ii0 p jj0 Covyityjt i j ˆij is the correlation coefficient between yit and yjt.

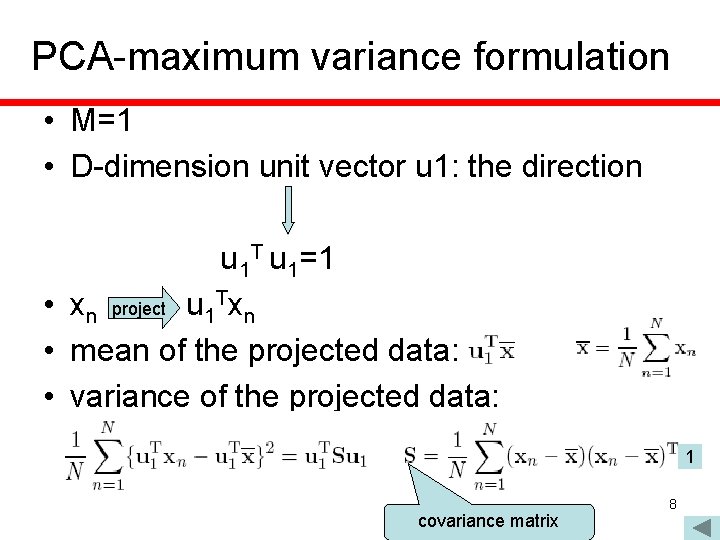

We need a special way to do this called scientific notation. A matrix with m rows and n columns is called an m n matrix or m -by- n matrix while m and n are called its dimensions. Principal Component Analysis 6.

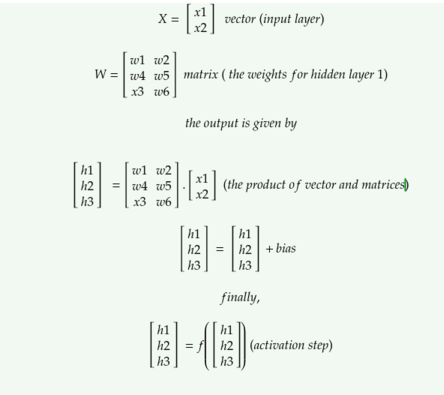

Matrix notation for a layer We have In matrix notation where f is applied element-wise. Vectors are denoted by boldface lowercase letters such asab. That doesnt make it a 4d or 5d array just a nested data structure where the dimensionality concept breaks down.

M_ij quad leftrightarrowquad textttMij quadquad mathrmfor i0dotsm-1 j0dotsn-1 Figure PageIndex1. Ii0 the diagonal matrix of the standard deviations of yis. Wedenote the constant vector of one as1with its dimension implied by thecontext.

7 where the sums in eq. For example matrices are represented by 2D arrays and the ijth component of an mtimes n matrix is written in Python notation as follows. I is the ith row of the D-dimensional data matrix X.

In particular we define. The dimensionality is the number of subscripts you can use to select elements. We also need a wa.

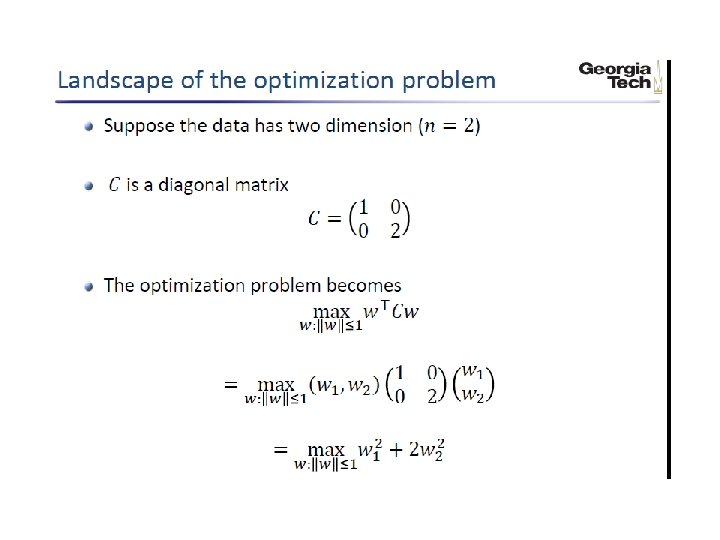

What criteria should we optimize for when learning U. Combining n doublets your spin 12s nets you 2 n n 2 k 0 n 1 2k n 1 n 1 k n 1 2k where is the integer floor function. 6 suggests a simple decomposition of the weight vector w into nonnegative components.

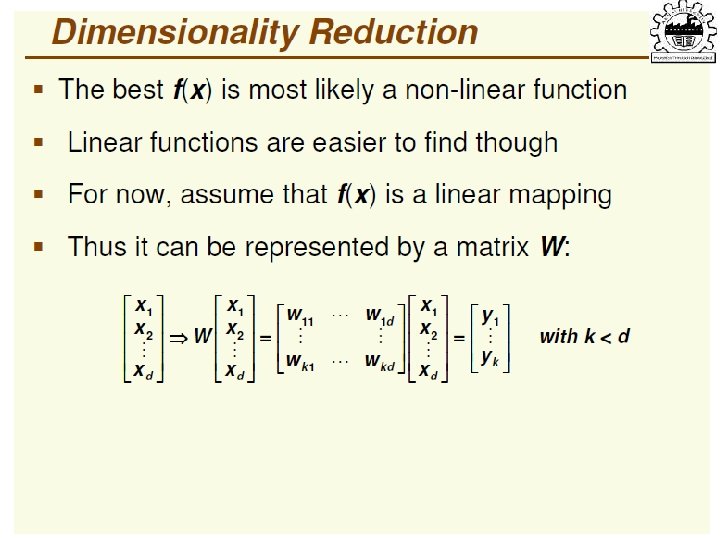

Let n covy. Principal Component Analysis PCA is an algorithm for doing this Machine Learning CS771A Linear Dimensionality Reduction. Your example 0 000 is still a 2d array albeit containing a 3d array as its second element.

For example the matrix A above is a 3 2 matrix. With this notation the gure on previous slide can be re-drawn as below How do we learn the best projection matrix U. To indicate their dimensions we use notation likea2n to representan-dimensional vector.

Example B 4 B 4 B 4 B 4. Figure 1 shows a. N_x times m Summary.

If your data is n m matrix where n is the number of samples and m the number of features its m -dimensional data. The matrix X is comprised of n rows each of which represent a different input feature and m columns each of which represent a different training example. ZWxb afz fz 1z 2z 3fz 1fz 2fz 3 37 W 12 b 3.

X f1 fn. Memory model of a 2D array. The basis vectors are arbitrary and all kinds of sets can satisfy the basis.

The dimensionality of V is 4 because it has four independant components that point in different directions and V can be represented by a set of four basis vectors. Find the eigenvectors of the covariance matrix 2. We introduce here some notation used throughout the paper.

But you get what I mean. A 1 a 2 a 3 a 1 fW 11 x 1 W 12 x 2 W 13 x 3 b 1 a 2 fW 21 x 1 W 22 x 2 W 23 x 3 b 2 etc. Let f 1y fnyn be n independent and identically distributed iid samples of fy.

M training examples Dimensions. Dimensionality reduction is the transformation of high-dimensional data into a meaningful representa-. Y y1 y and E 1 n.

7 range over positively and negatively labeled examples respectively. W X y i1 ix i. Matrices with a single row are called row vectors and those with a single column are called column vectors.

A 1 0 0 0 B 0 1 0 0 C 0 0 1 0 D 0 0 0 1. Original dimensionality First components oecome m new almenstons change coordinates of every data point to these dimensions 156 531.

Linear Algebra Explained In The Context Of Deep Learning By Laxman Vijay Towards Data Science

Matrix Algebra Sage Research Methods

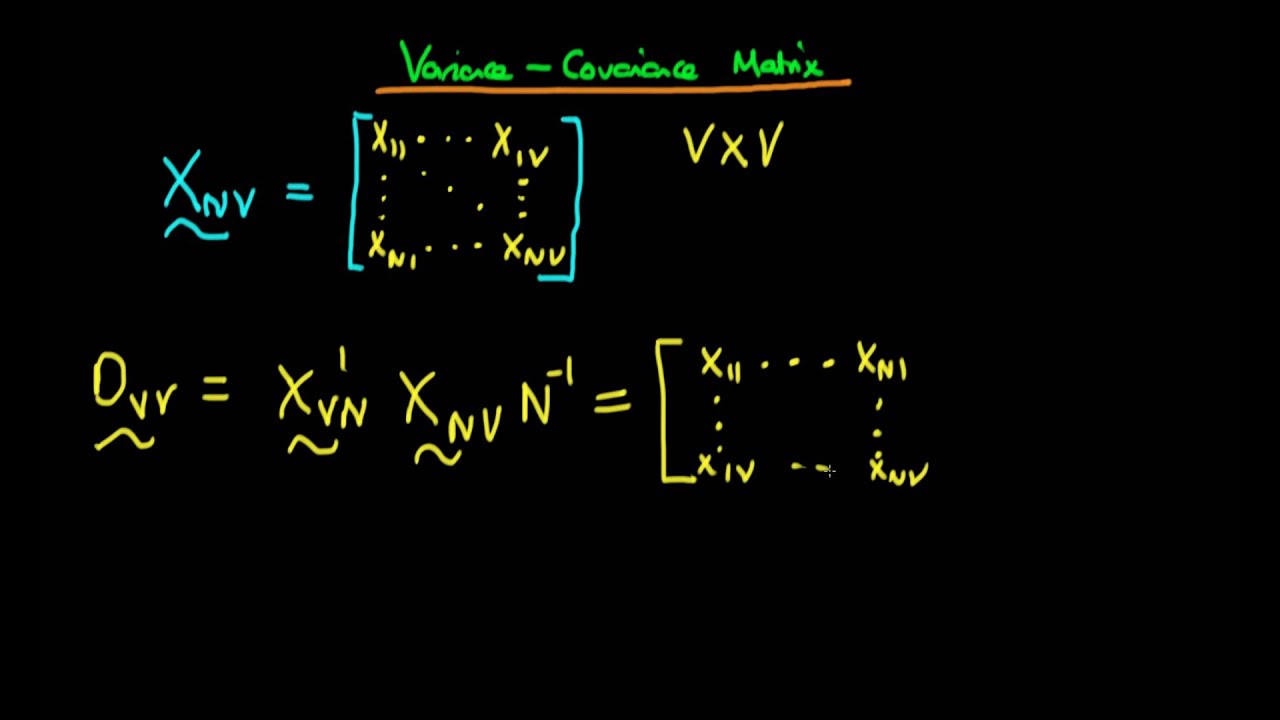

Variance Covariance Matrix Using Matrix Notation Of Factor Analysis Youtube

Curse Of Dimensionality Dimensionality Reduction Why Reduce Dimensionality

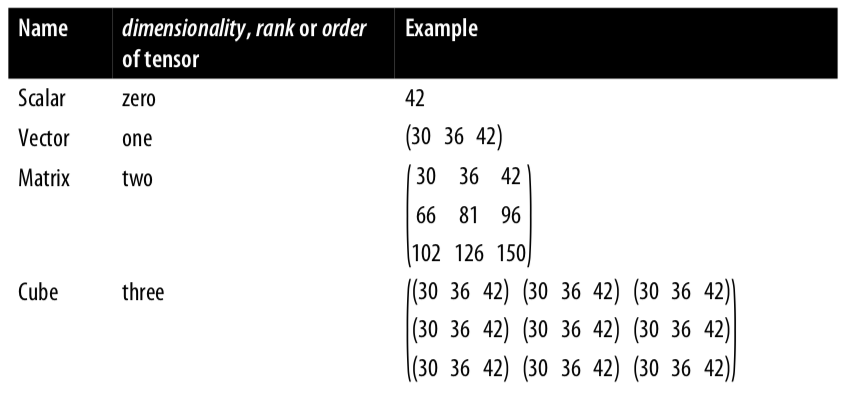

Confused About Tensors Dimensions Ranks Orders Matrices And Vectors By Romeo Kienzler Center For Open Source Data And Ai Technologies Medium

Hat Y X T Hat Beta Matrix Dimension For Linear Regression Coefficients Beta Mathematics Stack Exchange

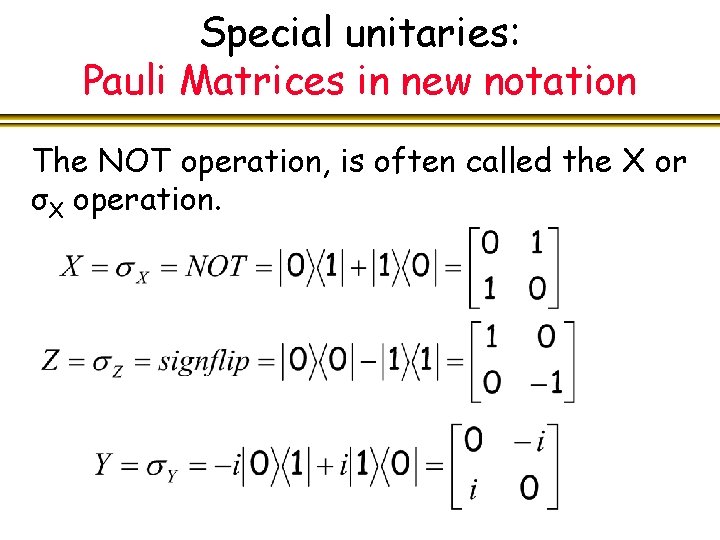

Dirac Notation And Spectral Decomposition Michele Mosca Review

Deep Learning Deep Guide For All Your Matrix Dimensions And Calculations By Skrew Everything From The Scratch Medium

Curse Of Dimensionality Dimensionality Reduction Why Reduce Dimensionality

Writing Dimension Of Matrix On Its Outside Corner Tex Latex Stack Exchange

How To Write Matrices With Dimensions In Latex Tex Latex Stack Exchange

Tutorial Kernel Dimensionality Reduction And Pca Kaggle

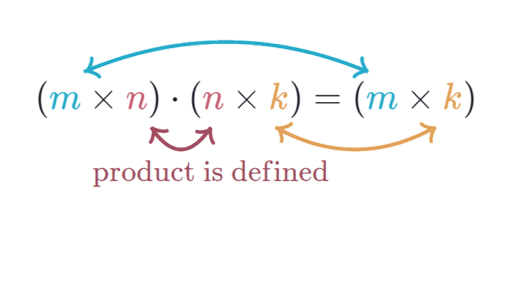

Matrix Multiplication Dimensions Article Khan Academy

Introduction To Dimensionality Reduction Geeksforgeeks

Continuous Latent Variables Bishop Xue Tian Continuous Latent

Confusion About Backpropagation Matrix Dimensions Cross Validated