PyTorch Matrix Multiply Vector

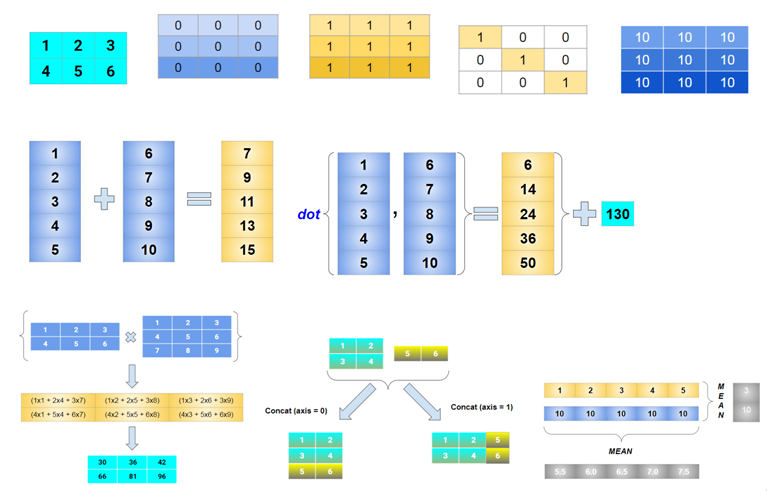

The entry (XY)_ij is obtained by multiplying row i of X by column j of Y, which is done by multiplying corresponding entries together and then adding the results. `def f(x1, x2):`
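As a minimal sketch of the row-by-column rule (the tensor names, shapes, and the chosen indices are illustrative assumptions, not taken from the original), one entry of the product can be computed by hand and compared against `torch.mm`:

```python
import torch

torch.manual_seed(0)
X = torch.randn(3, 4)   # 3 rows, 4 columns
Y = torch.randn(4, 2)   # 4 rows, 2 columns

XY = torch.mm(X, Y)     # full matrix product, shape (3, 2)

i, j = 1, 0             # illustrative choice of entry
# Row i of X times column j of Y: multiply matching entries, then sum.
entry = (X[i, :] * Y[:, j]).sum()

entry_matches = torch.allclose(entry, XY[i, j], atol=1e-6)
```

Repeating the same computation for every pair (i, j) fills in the whole product matrix.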

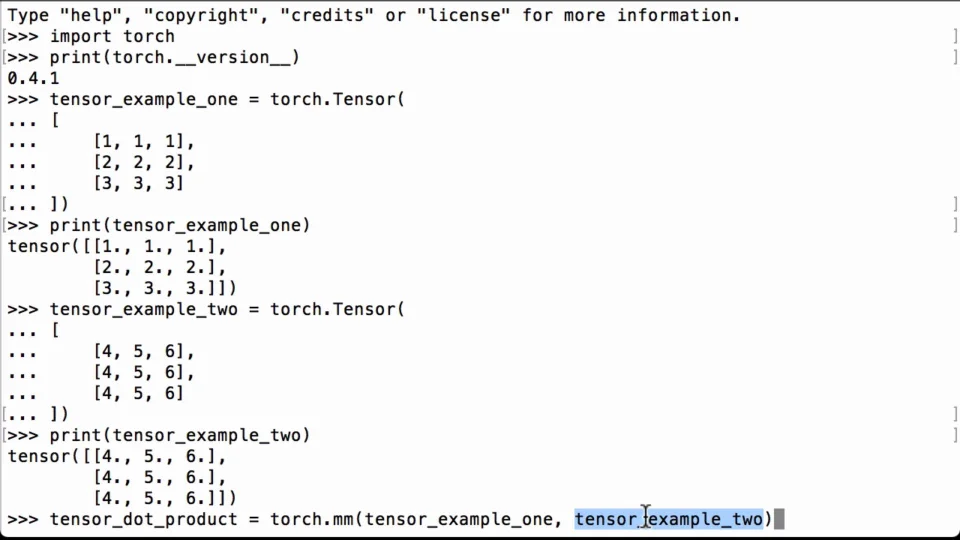

Pytorch Matrix Multiplication How To Do A Pytorch Dot Product Pytorch Tutorial

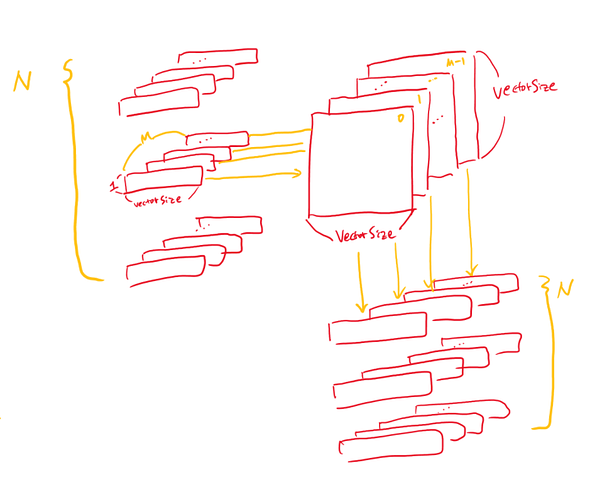

Batch matrix multiplication (batch matrix x matrix, size 10x3x5): `batch1 = torch.`

PyTorch matrix multiply vector. However, applications can still compute this using the matrix relation D. One of the easiest ways to compute the product of two matrices is to use the methods provided by PyTorch.

By popular demand, the function `torch.matmul` performs matrix multiplication if both arguments are 2D and computes their dot product if both arguments are 1D. With `B = torch.rand(4, 1)` I will have a column vector, and matrix multiplication with `mm` will work as expected. If you think about how matrix multiplication works (multiply and then sum), you'll realize that each `dot[i][j]` now stores the dot product of `E[i]` and `E[j]`.
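The following sketch illustrates the difference between `torch.matmul` and `torch.mm` described here. The tensor names and sizes are assumptions chosen for illustration only.

```python
import torch

A = torch.rand(3, 4)
u = torch.rand(4)
v = torch.rand(4)

# Both arguments 2D: ordinary matrix multiplication.
prod_2d = torch.matmul(A, torch.rand(4, 5))   # shape (3, 5)

# Both arguments 1D: dot product, returned as a 0-dimensional tensor.
dot_1d = torch.matmul(u, v)

# torch.mm needs two 2D tensors, so the vector is created as a (4, 1) column.
B = torch.rand(4, 1)
col_result = torch.mm(A, B)                   # shape (3, 1)

# A plain 1D tensor of size 4 is rejected by torch.mm.
try:
    torch.mm(A, torch.rand(4))
    mm_accepts_1d = True
except RuntimeError:
    mm_accepts_1d = False
```

The last check reflects that a 1D tensor of size 4 and a 4x1 tensor are treated as different shapes.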

In PyTorch, unlike NumPy, 1D tensors are not interchangeable with 1xN or Nx1 tensors. First, we matrix multiply E with its transpose. `tensor([4, 0, 5, 0])` Backpropagation with tensors in Python using PyTorch.

`tmp = x.bmm(F_x_t)` (batch_size x B x N). Matrix multiplication between a vector-wise sparse matrix A and a dense matrix B. `bmm(batch1, batch2)`. Batch matrix + matrix x matrix: performs a batch matrix-matrix product, 3x4 + (5x3x4 x 5x4x2) -> 5x3x2. `M = torch.`
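A minimal sketch of the batched product with the 5x3x4 and 5x4x2 shapes mentioned here follows. The comparison against a per-sample loop is an added illustration, not part of the original.

```python
import torch

torch.manual_seed(0)
batch1 = torch.randn(5, 3, 4)   # 5 matrices of shape 3x4
batch2 = torch.randn(5, 4, 2)   # 5 matrices of shape 4x2

# One matrix product per batch index; result has shape (5, 3, 2).
r = torch.bmm(batch1, batch2)

# The same result computed one sample at a time with torch.mm.
looped = torch.stack([torch.mm(batch1[k], batch2[k]) for k in range(5)])
same_as_loop = torch.allclose(r, looped, atol=1e-5)
```

`torch.bmm` requires both inputs to be 3D with the same batch size. It does not broadcast.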

After the matrix multiply, the prepended dimension is removed. Now, if we derive this by hand using the chain rule and the definition of the derivatives, we obtain the following set of identities that we can directly plug into the Jacobian matrix of. NumPy's `np.dot`, in contrast, is more flexible.

Multiply tensor by scalar in PyTorch: multiplying a tensor with a scalar results in a zero vector. As written in the comment, when using 040 get the same results as with NumPy. Their current implementation is an order of magnitude slower than the dense one. Currently, PyTorch does not support matrix multiplication with the layout signature `M[strided] @ M[sparse_coo]`.

This is a huge improvement on PyTorch sparse matrices. While ready to be reviewed, the architecture and naming scheme is.

It computes the inner product for 1D arrays and performs matrix multiplication for 2D arrays. `randn(3, 2)`; `batch1 = torch.` Now multiply by F_Y to give the write patch of shape batch_size x N x N times.
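The behavior of `np.dot` for 1D and 2D arrays can be sketched as follows. The array values are made up for illustration.

```python
import numpy as np

a = np.array([1.0, 2.0, 3.0])
b = np.array([4.0, 5.0, 6.0])

# 1D with 1D: inner product (1*4 + 2*5 + 3*6).
inner = np.dot(a, b)

M = np.array([[1.0, 2.0], [3.0, 4.0]])
N = np.array([[0.0, 1.0], [1.0, 0.0]])

# 2D with 2D: matrix multiplication.
prod = np.dot(M, N)

# 2D with 1D: matrix-vector product, with no reshaping needed.
mixed = np.dot(M, np.array([1.0, 1.0]))
```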

This ones vector is exactly the argument that we pass to the `backward()` function to compute the gradient, and this expression is called the Jacobian-vector product.
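To make the role of the ones vector concrete, here is a small sketch under stated assumptions: the linear map `y = A @ x` and the values of `A` are hypothetical, chosen because the Jacobian of this map is simply `A`. Passing a vector of ones to `backward` then yields the transposed Jacobian times that vector, which for this example is the column sums of `A`.

```python
import torch

# Hypothetical linear map y = A @ x, whose Jacobian dy/dx is A itself.
A = torch.tensor([[1.0, 2.0, 3.0],
                  [4.0, 5.0, 6.0]])
x = torch.tensor([1.0, 1.0, 1.0], requires_grad=True)

y = A @ x                      # shape (2,)
ones = torch.ones_like(y)      # the "ones vector"

# backward multiplies the (transposed) Jacobian by the vector it is given.
y.backward(ones)

# The same product formed explicitly from the full Jacobian.
J = torch.autograd.functional.jacobian(lambda t: A @ t, x.detach())
manual = J.t() @ ones
```

Here `x.grad` and `manual` both hold the column sums of `A`, so the full Jacobian never has to be built when only this product is needed.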

If the first argument is 2-dimensional and the second argument is 1-dimensional, the matrix-vector product is returned. For matrix multiplication in PyTorch, use `torch.mm`. We can easily show that we can obtain the gradient by multiplying the full Jacobian matrix by a vector of ones.
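A short sketch of the matrix-vector case, with made-up values. `torch.mv` and the explicit column-vector route through `torch.mm` are shown as alternatives for comparison.

```python
import torch

A = torch.tensor([[1.0, 2.0],
                  [3.0, 4.0],
                  [5.0, 6.0]])      # shape (3, 2)
v = torch.tensor([1.0, 10.0])       # shape (2,)

# 2D with 1D: torch.matmul returns the matrix-vector product, shape (3,).
mv_matmul = torch.matmul(A, v)

# torch.mv is the dedicated matrix-vector function.
mv_direct = torch.mv(A, v)

# torch.mm needs 2D inputs, so the vector becomes a (2, 1) column first.
mv_via_mm = torch.mm(A, v.unsqueeze(1)).squeeze(1)
```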

If the first argument is 1-dimensional and the second argument is 2-dimensional, a 1 is prepended to its dimension for the purpose of the matrix multiply. `a`, computed from `x1` and `x2`; `y1 = log(a)`; `y2 = sin(x2)`; `return (y1, y2)`; `def g(y1, y2):`. This is an elementwise multiplication, simply done by using the asterisk operator.
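The two ideas in this paragraph can be sketched side by side. All tensor names and values below are illustrative assumptions.

```python
import torch

u = torch.tensor([1.0, 2.0, 3.0])            # shape (3,)
A = torch.tensor([[1.0, 0.0],
                  [0.0, 1.0],
                  [1.0, 1.0]])               # shape (3, 2)

# 1D with 2D: u is treated as a (1, 3) row, multiplied, and the extra
# dimension is dropped again, leaving shape (2,).
left = torch.matmul(u, A)

# The same steps written out explicitly.
explicit = torch.mm(u.unsqueeze(0), A).squeeze(0)

P = torch.tensor([[1.0, 2.0], [3.0, 4.0]])
Q = torch.tensor([[10.0, 20.0], [30.0, 40.0]])

# The asterisk multiplies matching entries; it is not a matrix product.
elementwise = P * Q
matrix_prod = P @ Q
```

Comparing `elementwise` with `matrix_prod` shows that the asterisk and the matrix product give different results for the same inputs.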

Vector operations are of different types, such as mathematical operations, dot product, and linspace. PyTorch-Geometric (PyG) Fey. Matrix multiplication is an integral part of scientific computing.

Return y1 y2. `randn(10, 4, 5)`; `r = torch.` This results in a num_embeddings x num_embeddings matrix, `dot`.
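A minimal sketch of the embedding computation: multiplying `E` by its transpose gives a num_embeddings x num_embeddings matrix of pairwise dot products. The sizes and the random `E` are assumptions. The norm check at the end is an added sanity check, not part of the original.

```python
import torch

torch.manual_seed(0)
num_embeddings, dim = 5, 8          # illustrative sizes
E = torch.randn(num_embeddings, dim)

# dot[i][j] holds the dot product of E[i] and E[j].
dot = torch.mm(E, E.t())            # shape (5, 5)

# Spot-check one entry against an explicit dot product.
i, j = 2, 4
pair_matches = torch.allclose(dot[i][j], torch.dot(E[i], E[j]), atol=1e-5)

# Magnitude of each embedding vector; the diagonal of dot is its square.
norms = E.norm(dim=1)
diag_matches = torch.allclose(dot.diagonal(), norms ** 2, atol=1e-4)
```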

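`randn(5, 4, 2)`; `r = torch.` Vectors are one-dimensional tensors, which are used to manipulate data. The upstream gradient `dL_over_dy` (a `torch.tensor`, with values given as 4-159) is passed in with `y.backward(dL_over_dy)`, and the result is read from `x.grad`.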

It becomes complicated when the size of the matrix is huge. `randn(5, 3, 4)`; `batch2 = torch.` `addbmm` M.

PyTorch is an optimized tensor library used mainly for deep learning applications on GPUs and CPUs. If X and Y are matrices, where X has dimensions m x n and Y has dimensions n x p, then the product of X and Y has dimensions m x p. Do a batch multiplication by invoking the `bmm` method.
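The dimension rule can be checked directly. The values of m, n, and p below are arbitrary choices for illustration.

```python
import torch

m, n, p = 2, 3, 4                   # illustrative sizes
X = torch.rand(m, n)
Y = torch.rand(n, p)

# Inner dimensions agree (n and n), so the product is m x p.
XY = torch.mm(X, Y)

# Reversing the order gives (n x p) times (m x n); p != m, so it fails.
try:
    torch.mm(Y, X)
    reversed_ok = True
except RuntimeError:
    reversed_ok = False
```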

`B = torch.rand(4)` with. In that way, we will automatically multiply this local Jacobian matrix with the upstream gradient and get the downstream gradient vector as a result.

The GCS format is special in that it drops down to the usual CSR format for 2D tensors and supports multi-dimensional data using the same data structures used by CSR, but with a slightly augmented indexing scheme. `tmp = F_y.bmm(tmp)`. Multiply this by to get the glimpse vector. Introduction: this PR introduces the GCS format for efficient storage and operations on multi-dimensional tensors.

`tensor([-0.0000, -1.8421, -3.6841, -5.5262, -7.3683])`. Then we compute the magnitude of each embedding vector. If both arguments are at least 1-dimensional and at least one argument is N-dimensional (where N > 2), then a batched matrix multiply is returned.
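The batched case of `torch.matmul` can be sketched as follows. The shapes are illustrative assumptions. Unlike `torch.bmm`, `torch.matmul` broadcasts a lower-dimensional argument across the batch.

```python
import torch

torch.manual_seed(0)
batch = torch.randn(10, 3, 4)       # 10 matrices of shape 3x4
W = torch.randn(4, 5)               # a single 4x5 matrix
v = torch.randn(4)                  # a single vector

# The 2D matrix is applied to every batch element: shape (10, 3, 5).
out = torch.matmul(batch, W)

# The 1D vector is applied to every batch element: shape (10, 3).
out_v = torch.matmul(batch, v)

# Each slice equals the ordinary product for that batch element.
first_matches = torch.allclose(out[0], torch.mm(batch[0], W), atol=1e-5)
```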

W gamma tmp. `randn(10, 3, 4)`; `batch2 = torch.` This article covers how to perform matrix multiplication using PyTorch.

In this article we are going to discuss vector operations in PyTorch.

Pin On Algoritmos Programacion Inteligencia Computacional

How To Perform Basic Matrix Operations With Pytorch Tensor Dev Community

Linear Algebra For Deep Learning Introduction By Shrey Khanna Medium

Batch Matrix Vector Multiplication Without For Loop Pytorch Forums

Pytorch Batch Matrix Operation Pytorch Forums

Visual Representation Of Matrix And Vector Operations And Implementation In Numpy Torch And Tensor Towards Ai The Best Of Tech Science And Engineering

Batch Matrix Vector Multiplication Bmv Issue 1828 Pytorch Pytorch Github

Vector Jacobian Product Calculation Autograd Pytorch Forums

2 D Convolution As A Matrix Matrix Multiplication Stack Overflow

Is There An Function In Pytorch For Converting Convolutions To Fully Connected Networks Form Stack Overflow

Batch Matmul With Sparse Matrix Dense Vector Issue 14489 Pytorch Pytorch Github

Inner Dot Product Of Two Vectors Applications In Machine Learning

Left Tensor Notation Depicting A Scalar S Vector V I Matrix M Ij Download Scientific Diagram

Part 23 Orthonormal Vectors Orthogonal Matrices And Hadamard Matrix By Avnish Linear Algebra Medium

Matrix Multiplication In Lesson 4 Part 1 2019 Deep Learning Course Forums

Why Is The Derivative Of F X With Respect To X X And Not 1 In Pytorch Stack Overflow