Torch Multiply Matrix By Vector

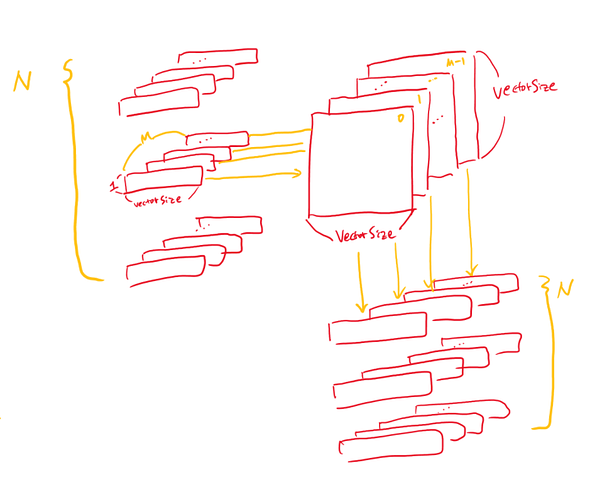

`tmp = x.bmm(F_x_t)` gives a tensor of shape `batch_size x B x N`, and `torch.arange(1, 3)` gives a vector of size 2.


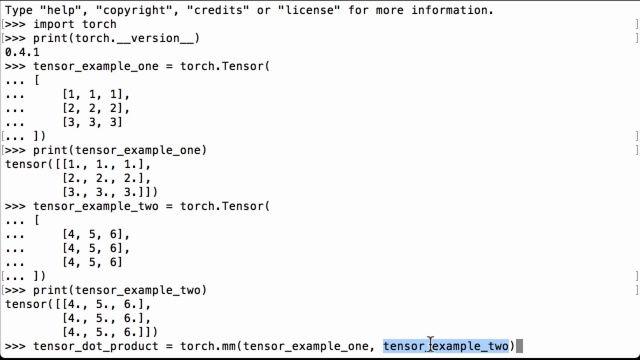

Do a batch multiplication of the two tensors by invoking the `bmm` method.
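A minimal sketch of a batched multiplication with `bmm`, assuming two hypothetical batches `x` and `y` of shapes `(batch_size, B, N)` and `(batch_size, N, P)`. The loop at the end only checks that `bmm` multiplies the matrices pairwise along the batch dimension.

```python
import torch

batch_size, B, N, P = 4, 3, 5, 2
x = torch.randn(batch_size, B, N)
y = torch.randn(batch_size, N, P)

# bmm multiplies the i-th matrix of x with the i-th matrix of y
out = x.bmm(y)  # same as torch.bmm(x, y)
print(out.shape)  # torch.Size([4, 3, 2])

# Equivalent explicit loop over the batch, for comparison
looped = torch.stack([x[i] @ y[i] for i in range(batch_size)])
print(torch.allclose(out, looped, atol=1e-6))
```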

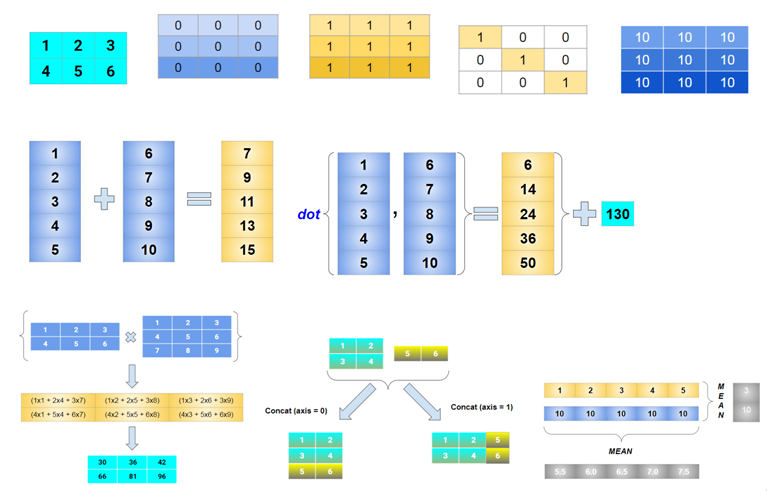

This results in a `num_embeddings x num_embeddings` matrix, `dot`. So if A is an m × n matrix, the product Ax is defined for n × 1 column vectors x. Multiplication of matrices: if X and Y are matrices, X has dimensions m × n and Y has dimensions n × p, then the product XY has dimensions m × p.
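A small sketch of the shape rule for a matrix-vector product, using a hypothetical 3 × 4 matrix `A` and a vector `x` with 4 entries. `torch.mv` takes a 1D vector, while `torch.mm` needs the vector reshaped into an explicit n × 1 column.

```python
import torch

m, n = 3, 4
A = torch.arange(m * n, dtype=torch.float32).reshape(m, n)  # m x n matrix
x = torch.ones(n)  # vector with n entries

y = torch.mv(A, x)  # matrix-vector product, shape (m,)
print(y)  # tensor([ 6., 22., 38.])

# The @ operator gives the same result
assert torch.equal(y, A @ x)

# An explicit n x 1 column vector works with mm and returns an m x 1 result
y_col = torch.mm(A, x.unsqueeze(1))
print(y_col.shape)  # torch.Size([3, 1])
```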

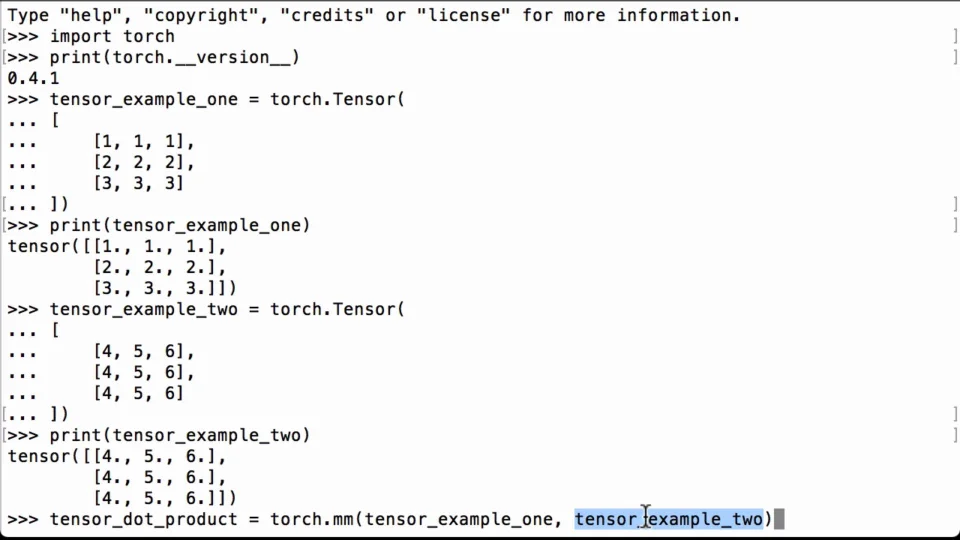

The matrix `input` is added to the final result. If you think about how matrix multiplication works (multiply and then sum), you'll realize that each `dot[i][j]` now stores the dot product of `E[i]` and `E[j]`. For a matrix-vector product, use `torch.mv(a, b)`. Note that in the future you may also find `torch.matmul` useful.
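The embedding argument above can be checked directly. This sketch assumes a hypothetical embedding matrix `E` with one embedding per row; `E @ E.T` produces the `num_embeddings x num_embeddings` matrix `dot`, and `torch.mv` covers the single-vector case.

```python
import torch

num_embeddings, dim = 5, 3
E = torch.randn(num_embeddings, dim)  # one embedding per row

# (E @ E.T)[i, j] = sum_k E[i, k] * E[j, k], the dot product of E[i] and E[j]
dot = E @ E.T
print(dot.shape)  # torch.Size([5, 5])

# Check one entry against an explicit dot product
assert torch.allclose(dot[1, 2], torch.dot(E[1], E[2]))

# torch.mv handles the matrix-vector case: every embedding dotted with E[0]
scores = torch.mv(E, E[0])
assert torch.allclose(scores, dot[:, 0])
```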

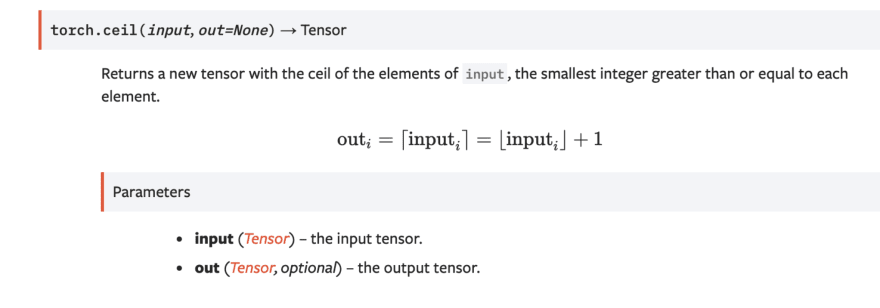

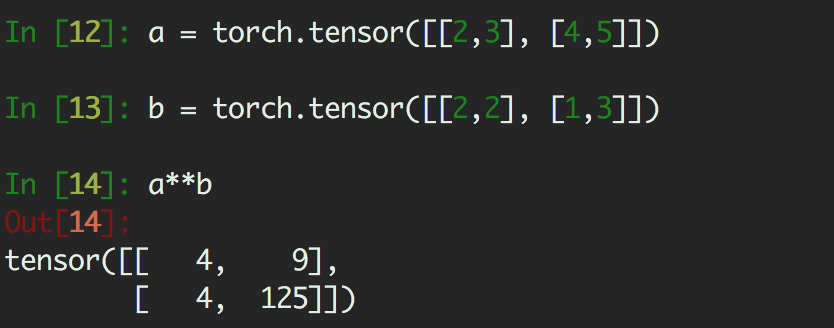

When comparing the outputs of `torch.mm` and `torch.einsum` for matrix multiplication, the results are not exactly identical. This method allows the multiplication of vectors (one-dimensional tensors), 2D matrices, and mixed combinations of the two. `torch.addmv` performs a matrix-vector multiplication between `mat` (a 2D tensor) and `vec2` (a 1D tensor) and adds the result to `vec1`.
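A minimal `torch.addmv` sketch with small hand-picked tensors. In current PyTorch the two optional scalars are the keyword arguments `beta` (scaling `vec1`) and `alpha` (scaling `mat @ vec2`); the values below are arbitrary.

```python
import torch

vec1 = torch.tensor([1.0, 1.0])          # vector that is added (the "input")
mat = torch.tensor([[1.0, 2.0, 3.0],
                    [4.0, 5.0, 6.0]])     # 2D tensor, shape (2, 3)
vec2 = torch.tensor([1.0, 0.0, 1.0])     # 1D tensor, shape (3,)

# beta scales vec1, alpha scales mat @ vec2
res = torch.addmv(vec1, mat, vec2, beta=2.0, alpha=0.5)
print(res)  # tensor([4., 7.])

# Same computation written out by hand
assert torch.allclose(res, 2.0 * vec1 + 0.5 * (mat @ vec2))
```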

When we calculate the gradient of a vector-valued function (a function whose inputs and outputs are vectors), we are essentially constructing a Jacobian matrix. `torch.ger(v1, v2)` computes the outer product of two vectors, and `torch.addr` adds a matrix `M` to the outer product of two vectors.

For example, with `vec1 = torch.arange(1, 4)` (size 3) and `vec2 = torch.arange(1, 3)` (size 2), the outer product of the two vectors has size 3 × 2.
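A sketch of the outer-product example with the same sizes. It uses `torch.outer`, which newer PyTorch releases provide in place of the older `torch.ger`, and checks that `torch.addr` adds a zero matrix `M` to that outer product.

```python
import torch

vec1 = torch.arange(1.0, 4.0)  # tensor([1., 2., 3.]), size 3
vec2 = torch.arange(1.0, 3.0)  # tensor([1., 2.]), size 2

# Outer product: r[i, j] = vec1[i] * vec2[j], size 3 x 2
r = torch.outer(vec1, vec2)  # torch.ger is the older name for this operation
print(r)
# tensor([[1., 2.],
#         [2., 4.],
#         [3., 6.]])

# addr adds a matrix M to the outer product
M = torch.zeros(3, 2)
assert torch.equal(torch.addr(M, vec1, vec2), M + r)
```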

In other words, `res = v1 * vec1 + v2 * (mat @ vec2)`. This method also supports broadcasting and batch operations.

The same result is also produced by NumPy. `torch.matmul` infers the dimensionality of its arguments and accordingly performs a dot product between vectors, a matrix-vector or vector-matrix multiplication, a matrix multiplication, or a batched matrix multiplication for higher-order tensors.
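The dispatch rules of `torch.matmul` can be seen from the output shapes alone. The tensors below are random placeholders; the last lines check one case against `np.matmul`, which follows the same rules.

```python
import numpy as np
import torch

v = torch.randn(3)
M = torch.randn(4, 3)
N = torch.randn(3, 5)
batch = torch.randn(10, 4, 3)

print(torch.matmul(v, v).shape)      # 1D @ 1D: dot product -> torch.Size([])
print(torch.matmul(M, v).shape)      # 2D @ 1D: matrix-vector -> torch.Size([4])
print(torch.matmul(v, N).shape)      # 1D @ 2D: vector-matrix -> torch.Size([5])
print(torch.matmul(M, N).shape)      # 2D @ 2D: matrix product -> torch.Size([4, 5])
print(torch.matmul(batch, N).shape)  # batched, N is broadcast -> torch.Size([10, 4, 5])

# NumPy's matmul follows the same rules
out_np = np.matmul(M.numpy(), N.numpy())
assert np.allclose(out_np, torch.matmul(M, N).numpy(), atol=1e-5)
```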

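If the first argument is 2-dimensional and the second argument is 1-dimensional, the matrix-vector product is returned. The `torch.mm` versus `torch.einsum` comparison can be reproduced with a short script:

```python
import torch
import numpy as np

torch.manual_seed(2)
a = torch.randn(4, 4)
b = torch.randn(4, 4)
c_torch_mm = torch.mm(a, b)

# The same product via einsum and NumPy; any mismatch should be
# at the level of floating-point rounding
c_torch_einsum = torch.einsum("ij,jk->ik", a, b)
c_numpy = np.matmul(a.numpy(), b.numpy())
print((c_torch_mm - c_torch_einsum).abs().max())
print(np.abs(c_torch_mm.numpy() - c_numpy).max())
```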

After the matrix multiply, the prepended dimension is removed. `torch.sparse.addmm` does exactly the same thing as `torch.addmm` in the forward pass, except that it supports backward for the sparse matrix `mat1`. Optional values `v1` and `v2` are scalars that multiply `vec1` and `mat @ vec2`, respectively.

To multiply a row vector by a column vector, the row vector must have as many columns as the column vector has rows. `torch.addmm(input, mat1, mat2, *, beta=1, alpha=1, out=None) -> Tensor` performs a matrix multiplication of the matrices `mat1` and `mat2`. Thanks to the chain rule, multiplying the Jacobian matrix of a function by a vector holding the previously calculated gradients of a scalar function gives the gradients of that scalar output with respect to the inputs of the vector-valued function.
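A sketch of both ideas with small, arbitrary tensors: `torch.addmm` computes `beta * input + alpha * (mat1 @ mat2)`, and `y.backward(v)` computes the vector-Jacobian product `v^T J` for a non-scalar output. The function `x ** 2` and the vector `v` are illustrative choices only; `torch.autograd.functional.jacobian` is used just to build `J` explicitly for the check.

```python
import torch

# addmm computes beta * input + alpha * (mat1 @ mat2); beta = alpha = 1 by default
inp = torch.ones(2, 2)
mat1 = torch.tensor([[1.0, 2.0], [3.0, 4.0]])
mat2 = torch.eye(2)
out = torch.addmm(inp, mat1, mat2)
assert torch.equal(out, inp + mat1 @ mat2)

# Vector-Jacobian product: y = x ** 2 has Jacobian J = diag(2 * x)
x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)
y = x ** 2
v = torch.tensor([1.0, 0.5, 0.1])  # stands in for dl/dy of some scalar l
y.backward(v)                      # stores v^T J in x.grad

J = torch.autograd.functional.jacobian(lambda t: t ** 2, x.detach())
assert torch.allclose(x.grad, v @ J)
print(x.grad)
```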

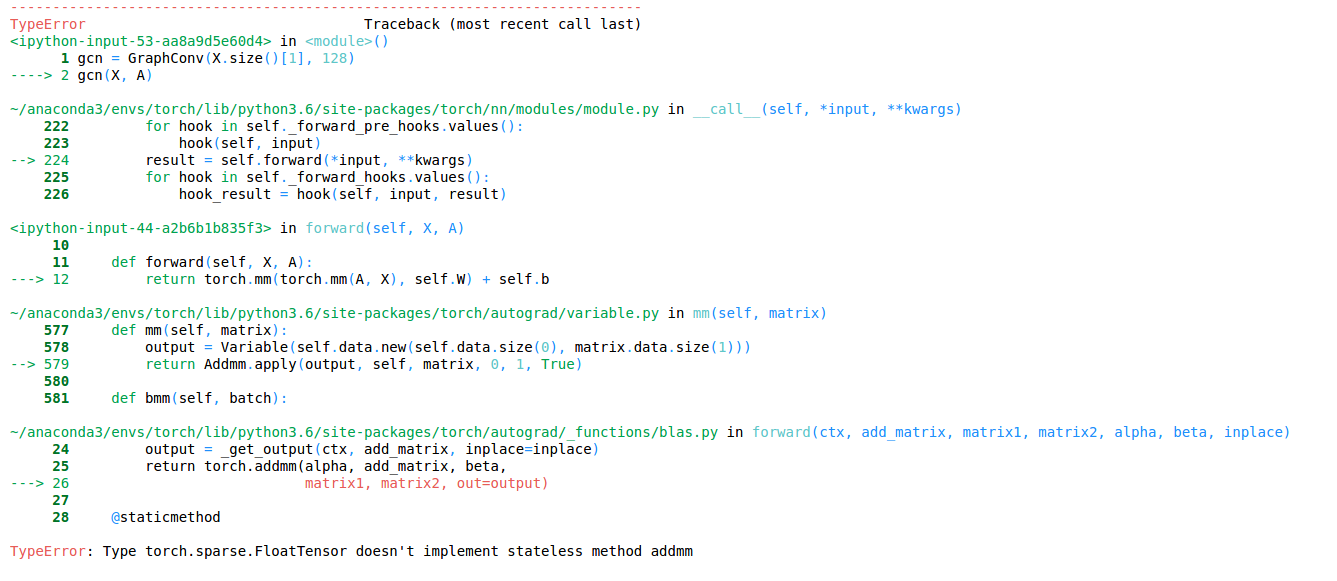

Now multiply by `F_Y` to give the write patch of shape `batch_size x N x N`. `torch.sparse.mm` performs a matrix multiplication of the sparse matrix `mat1` and the sparse or strided matrix `mat2`.
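A minimal sparse-times-dense sketch, assuming a hypothetical 2 × 3 sparse COO matrix with two nonzero entries. It checks `torch.sparse.mm` against the dense product and shows `torch.sparse.addmm`, which adds a dense matrix to that product.

```python
import torch

# A small sparse COO matrix with two nonzero entries: (0, 1) = 3 and (1, 2) = 4
indices = torch.tensor([[0, 1], [1, 2]])  # first row: row indices, second row: column indices
values = torch.tensor([3.0, 4.0])
mat1 = torch.sparse_coo_tensor(indices, values, size=(2, 3))
mat2 = torch.randn(3, 4)  # dense (strided) matrix

prod = torch.sparse.mm(mat1, mat2)  # dense result, since mat2 is dense
assert torch.allclose(prod, mat1.to_dense() @ mat2)

# torch.sparse.addmm: dense input + sparse mat1 @ dense mat2
bias = torch.zeros(2, 4)
out = torch.sparse.addmm(bias, mat1, mat2)
assert torch.allclose(out, bias + prod)
```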

The entry (XY)_ij is obtained by multiplying row i of X by column j of Y, that is, by multiplying corresponding entries together and then adding the results.
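The entry-by-entry definition can be written as an explicit double loop and compared with `@`. The 3 × 2 and 2 × 3 matrices are arbitrary examples; the loop is only for illustration, since `@` is the practical way to compute the product.

```python
import torch

X = torch.tensor([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]])  # 3 x 2
Y = torch.tensor([[1.0, 0.0, 2.0], [0.0, 1.0, 3.0]])    # 2 x 3

m, n = X.shape
n2, p = Y.shape
assert n == n2, "columns of X must equal rows of Y"

# (XY)[i, j] = sum over k of X[i, k] * Y[k, j]
C = torch.zeros(m, p)
for i in range(m):
    for j in range(p):
        C[i, j] = (X[i, :] * Y[:, j]).sum()  # row i times column j, then add

assert torch.equal(C, X @ Y)
print(C)
```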

`w = gamma * tmp`. If the first argument is 1-dimensional and the second argument is 2-dimensional, a 1 is prepended to its dimension for the purpose of the matrix multiply. If both arguments are at least 1-dimensional and at least one argument is N-dimensional (where N > 2), then a batched matrix multiply is returned.
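A sketch of the two `torch.matmul` rules just described, with random placeholder tensors: a 1D first argument is treated as a 1 × n row (and the extra dimension is dropped afterwards), and batch dimensions of N-dimensional arguments are broadcast.

```python
import torch

v = torch.randn(3)         # 1D
M = torch.randn(3, 4)      # 2D

# 1D @ 2D: v is treated as a 1 x 3 matrix, then the prepended dimension is removed
out = torch.matmul(v, M)
assert out.shape == (4,)
assert torch.allclose(out, (v.unsqueeze(0) @ M).squeeze(0))

# Batched: batch dimensions (2, 1) and (5,) broadcast to (2, 5)
A = torch.randn(2, 1, 3, 4)
B = torch.randn(5, 4, 6)
batched = torch.matmul(A, B)
assert batched.shape == (2, 5, 3, 6)
assert torch.allclose(batched[1, 2], A[1, 0] @ B[2], atol=1e-5)
```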

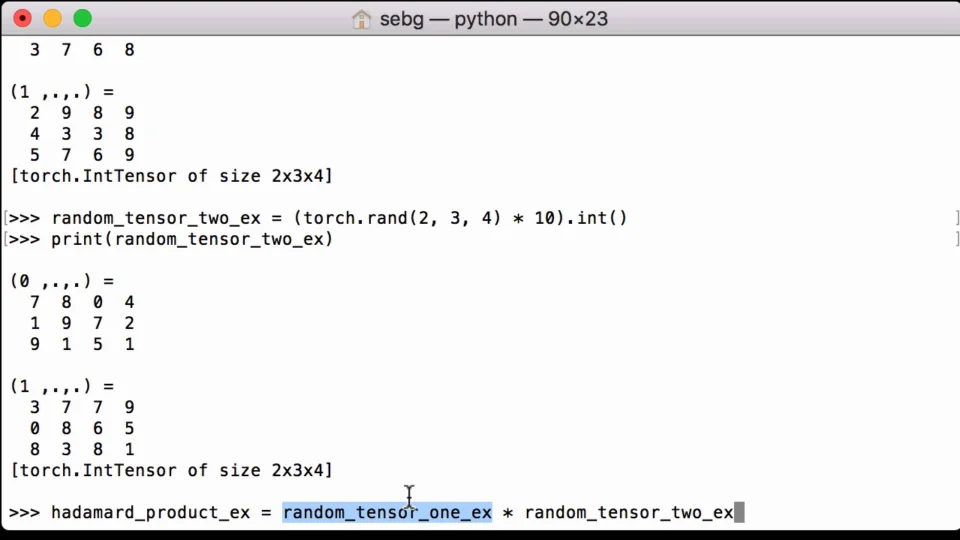

We also went through some common operations on tensors, such as addition, scaling, dot products, and matrix multiplication. Finally, we quickly revised what differentiation and partial differentiation are and how they can be computed using PyTorch. The 2 denotes that we are computing the L2 (Euclidean) norm of each vector.
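A short example of taking the L2 norm of each row, using a hand-picked matrix so the results are easy to verify. `torch.linalg.vector_norm` is shown as the newer equivalent of `torch.norm` for this case.

```python
import torch

E = torch.tensor([[3.0, 4.0], [1.0, 0.0], [0.0, 2.0]])  # one vector per row

# p=2 selects the L2 (Euclidean) norm; dim=1 computes it per row
norms = torch.norm(E, p=2, dim=1)
print(norms)  # tensor([5., 1., 2.])

# torch.linalg.vector_norm is the newer equivalent
assert torch.equal(norms, torch.linalg.vector_norm(E, ord=2, dim=1))
```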

Let us define the multiplication between a matrix A and a vector x, in which the number of columns in A equals the number of rows in x. Elementwise multiplication, by contrast, is done simply with the asterisk (`*`) operator. If p happened to be 1, then B would be an n × 1 column vector and we'd be back to the matrix-vector product. The product AB is an m × p matrix, which we'll call C, i.e. AB = C.
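The difference between `*` and `@` is easiest to see on two small matrices. The last check takes p = 1 by slicing a single column out of `B`, which turns the matrix product into a matrix-vector product.

```python
import torch

A = torch.tensor([[1.0, 2.0], [3.0, 4.0]])
B = torch.tensor([[5.0, 6.0], [7.0, 8.0]])

elementwise = A * B  # multiplies matching entries: [[5, 12], [21, 32]]
matrix = A @ B       # row-by-column sums: [[19, 22], [43, 50]]

# With p = 1, B is a single column and the matrix product is a matrix-vector product
x = B[:, :1]         # n x 1 column vector
assert torch.equal(A @ x, (A @ B)[:, :1])
```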

In math terms, we say we can multiply an m × n matrix A by an n × p matrix B. Then we compute the magnitude of each embedding vector. `torch.sspaddmm` matrix-multiplies a sparse tensor `mat1` with a dense tensor `mat2`, then adds the sparse tensor `input`.
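Putting the embedding steps together: a sketch, assuming a hypothetical embedding matrix `E`, that divides the dot-product matrix by the product of the magnitudes to get pairwise cosine similarities. The cross-check normalizes the rows first with `torch.nn.functional.normalize`.

```python
import torch
import torch.nn.functional as F

E = torch.randn(4, 8)  # 4 hypothetical embeddings of dimension 8

dot = E @ E.T                          # dot[i, j] = E[i] . E[j]
norms = E.norm(p=2, dim=1)             # magnitude of each embedding
cos = dot / torch.outer(norms, norms)  # dot[i, j] / (|E[i]| * |E[j]|)

# Cross-check: normalize each row to unit length first, then take dot products
E_unit = F.normalize(E, p=2, dim=1)
assert torch.allclose(cos, E_unit @ E_unit.T, atol=1e-5)
assert torch.allclose(cos.diagonal(), torch.ones(4), atol=1e-5)
```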

The operation performed depends upon the input matrices. `tmp = F_y.bmm(tmp)`; multiply this by `gamma` to get the glimpse vector.
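The `bmm` steps scattered through this page (`x.bmm(F_x_t)`, then `F_y.bmm(tmp)`, then scaling by `gamma`) can be sketched end to end. All sizes are hypothetical, and `F_x`/`F_y` are random stand-ins used only to show the shapes; they are not real attention filterbanks.

```python
import torch

batch_size, B, A, N = 2, 28, 28, 5  # hypothetical image height B, width A, glimpse size N

x = torch.rand(batch_size, B, A)    # batch of images
F_x = torch.rand(batch_size, N, A)  # random stand-ins for the filter matrices
F_y = torch.rand(batch_size, N, B)
gamma = torch.rand(batch_size)      # one scalar per example

F_x_t = F_x.transpose(1, 2)         # batch_size x A x N
tmp = x.bmm(F_x_t)                  # batch_size x B x N
tmp = F_y.bmm(tmp)                  # batch_size x N x N
glimpse = gamma.view(-1, 1, 1) * tmp
glimpse = glimpse.view(batch_size, -1)  # flatten to a glimpse vector of length N * N
print(glimpse.shape)  # torch.Size([2, 25])
```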
