Invertible Matrix Linearly Dependent Columns

The columns of A are linearly dependent because, if the last column of B is denoted $\mathbf{b}_p$, the last column of AB can be rewritten as $A\mathbf{b}_p = \mathbf{0}$.
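Here is a small numerical sketch of this argument. It uses a hypothetical $2 \times 2$ matrix A whose second column is twice its first, and a hypothetical B whose last column $\mathbf{b}_p = (2, -1)$ is nonzero. Neither matrix comes from the original exercise. The last column of AB comes out as zero even though $\mathbf{b}_p$ is not.

```python
def matmul(X, Y):
    """Multiply matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))]
            for i in range(len(X))]

# Hypothetical example: column 2 of A is 2 * column 1, so the columns are dependent.
A = [[1, 2],
     [3, 6]]
# Hypothetical B with no zero column; its last column is b_p = (2, -1).
B = [[1, 2],
     [0, -1]]

AB = matmul(A, B)
b_p = [row[-1] for row in B]
last_col_of_AB = [row[-1] for row in AB]  # equals A times b_p

print(b_p)             # [2, -1]
print(last_col_of_AB)  # [0, 0]: b_p is a nontrivial solution of A x = 0
```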

Prove that a square matrix is invertible if its columns are linearly independent. Hint: if the columns of A are linearly dependent, then at least one column is a linear combination of the others (with only two columns, one is a multiple of the other).
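The statement can be checked on concrete matrices. The sketch below is an illustration, not a proof. It computes the rank by Gaussian elimination over exact fractions, a standard technique swapped in here for the proof. A square matrix counts as invertible exactly when its columns are linearly independent, which means its rank equals its number of columns. The function names `rank`, `columns_independent`, and `is_invertible` are hypothetical.

```python
from fractions import Fraction

def rank(M):
    """Rank of a matrix (list of rows) via Gaussian elimination over exact fractions."""
    R = [[Fraction(x) for x in row] for row in M]
    rows, cols = len(R), len(R[0])
    r = 0  # index of the next pivot row
    for c in range(cols):
        # Find a row at or below r with a nonzero entry in column c.
        pivot = next((i for i in range(r, rows) if R[i][c] != 0), None)
        if pivot is None:
            continue
        R[r], R[pivot] = R[pivot], R[r]
        # Eliminate column c from every row below the pivot row.
        for i in range(r + 1, rows):
            factor = R[i][c] / R[r][c]
            R[i] = [a - factor * b for a, b in zip(R[i], R[r])]
        r += 1
        if r == rows:
            break
    return r

def columns_independent(M):
    """Columns are linearly independent exactly when the rank equals the number of columns."""
    return rank(M) == len(M[0])

def is_invertible(M):
    """A square matrix is invertible exactly when its columns are linearly independent."""
    return len(M) == len(M[0]) and columns_independent(M)

print(is_invertible([[2, 1], [1, 1]]))  # True
print(is_invertible([[1, 2], [2, 4]]))  # False: column 2 = 2 * column 1
```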

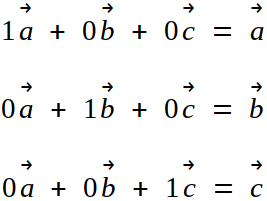

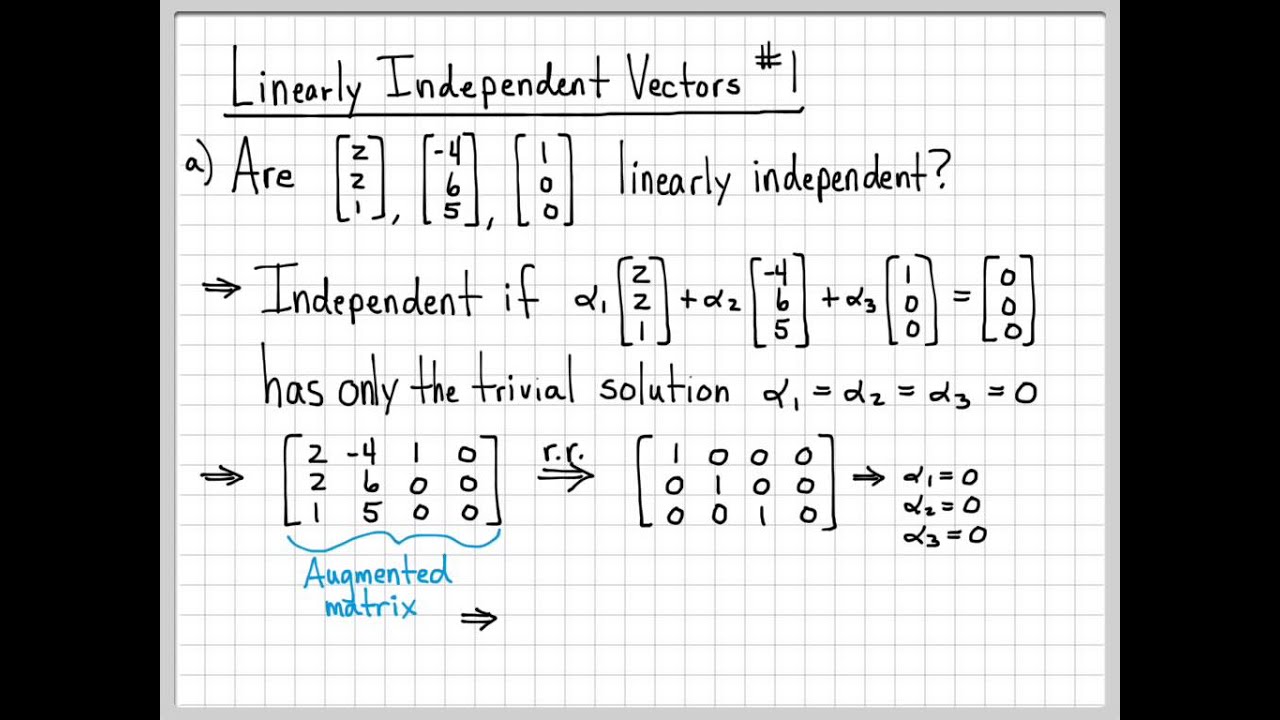

Example 4.10.3. If A is an $n \times n$ matrix such that the linear system $A^T\mathbf{x} = \mathbf{0}$ has no nontrivial solution $\mathbf{x}$, then $\operatorname{nullspace}(A^T) = \{\mathbf{0}\}$. Thus $A^T$ is invertible by the equivalence of (a) with the trivial-null-space condition in the Invertible Matrix Theorem.

Saying there is a subset of linearly dependent columns means that some columns $\mathbf{c}_{i_1}, \mathbf{c}_{i_2}, \dots, \mathbf{c}_{i_k}$ are linearly dependent. So there exist scalars $a_1, \dots, a_k$, not all zero, such that $a_1\mathbf{c}_{i_1} + a_2\mathbf{c}_{i_2} + \cdots + a_k\mathbf{c}_{i_k} = \mathbf{0}$.

Say I've got some matrix A that is $n \times k$, and not just any $n \times k$ matrix. This matrix has a bunch of columns that are all linearly independent: $\mathbf{a}_1, \mathbf{a}_2, \dots, \mathbf{a}_k$, all the column vectors of A, are linearly independent. Now what does that mean?
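A standard consequence of this setup is that when the columns of A are linearly independent, the square matrix $A^TA$ is invertible. The sketch below only spot-checks this on two hypothetical $3 \times 2$ matrices by computing $\det(A^TA)$. One matrix has independent columns, and in the other the second column is twice the first. This is not a proof.

```python
def transpose(M):
    """Transpose a matrix stored as a list of rows."""
    return [list(col) for col in zip(*M)]

def matmul(X, Y):
    """Row-by-column product of two list-of-rows matrices."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*Y)] for row in X]

def det2(M):
    """Determinant of a 2 x 2 matrix."""
    (p, q), (r, s) = M
    return p * s - q * r

A_indep = [[1, 0], [0, 1], [1, 1]]  # columns (1,0,1) and (0,1,1): independent
A_dep = [[1, 2], [2, 4], [3, 6]]    # column 2 = 2 * column 1: dependent

G_indep = matmul(transpose(A_indep), A_indep)  # A^T A
G_dep = matmul(transpose(A_dep), A_dep)

print(G_indep, det2(G_indep))  # [[2, 1], [1, 2]] 3
print(G_dep, det2(G_dep))      # [[14, 28], [28, 56]] 0
```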

Is a $2 \times 3$ matrix invertible? No: only square matrices can have an inverse. In three dimensions, one way to characterize the linear dependence of the rows or columns of a square matrix M is that the volume of the parallelepiped formed by the rows (or columns) of M is zero. If the rows of a square matrix are linearly dependent, its columns are linearly dependent as well.
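In $\mathbb{R}^3$, the volume of the parallelepiped spanned by $\mathbf{u}, \mathbf{v}, \mathbf{w}$ is the absolute value of the scalar triple product $\mathbf{u} \cdot (\mathbf{v} \times \mathbf{w})$. This equals $|\det M|$ for the matrix M with those vectors as rows. Below is a minimal sketch with hypothetical helper names and illustrative vectors:

```python
def cross(u, v):
    """Cross product of two vectors in R^3."""
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def parallelepiped_volume(u, v, w):
    """|u . (v x w)|, i.e. |det| of the matrix whose rows are u, v, w."""
    return abs(dot(u, cross(v, w)))

print(parallelepiped_volume((1, 0, 0), (0, 1, 0), (0, 0, 1)))  # 1
# (7, 8, 9) = 2 * (4, 5, 6) - (1, 2, 3), so the rows are dependent and the volume is 0.
print(parallelepiped_volume((1, 2, 3), (4, 5, 6), (7, 8, 9)))  # 0
```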

Since $\mathbf{x} = \mathbf{0}$ is the only solution of $A\mathbf{x} = \mathbf{0}$, the columns of A must be linearly independent. Explain why the columns of an $n \times n$ matrix A span $\mathbb{R}^n$ when A is invertible. For every $\mathbf{b}$ in $\mathbb{R}^n$, the vector $\mathbf{x} = A^{-1}\mathbf{b}$ solves $A\mathbf{x} = \mathbf{b}$, so every $\mathbf{b}$ is a linear combination of the columns of A. Since $\mathbf{b}_p$ is not all zeros, it is a nontrivial solution of $A\mathbf{x} = \mathbf{0}$.

Therefore the columns of $A^{-1}$ are linearly independent. According to the Invertible Matrix Theorem, if a matrix is invertible, its columns form a linearly independent set. The vectors $\mathbf{a}$ and $\mathbf{b}$ are linearly dependent.

We see by inspection that the columns of A are linearly dependent, since the first two columns are identical. When the determinant of a square matrix is zero, both its rows and its columns are linearly dependent. Let the multiplier be $m$.

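If the columns of a square matrix are linearly dependent, the matrix has no inverse at all. When A is invertible, the columns of both A and $A^{-1}$ are linearly independent. If $\mathbf{A} = [\mathbf{x}_0\ \mathbf{x}_1\ \mathbf{x}_2]$, a matrix consisting of three column vectors, is invertible, its inverse is given by

$$\mathbf{A}^{-1} = \frac{1}{\det \mathbf{A}} \begin{bmatrix} (\mathbf{x}_1 \times \mathbf{x}_2)^{\mathrm{T}} \\ (\mathbf{x}_2 \times \mathbf{x}_0)^{\mathrm{T}} \\ (\mathbf{x}_0 \times \mathbf{x}_1)^{\mathrm{T}} \end{bmatrix}.$$

If the determinant of an $n \times n$ matrix A is zero, then A is not invertible.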
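The cross-product formula for the inverse of a $3 \times 3$ matrix $A = [\mathbf{x}_0\ \mathbf{x}_1\ \mathbf{x}_2]$ can be checked directly. Row $i$ of the result is the cross product of the other two columns, in cyclic order, divided by $\det A = \mathbf{x}_0 \cdot (\mathbf{x}_1 \times \mathbf{x}_2)$. The sketch below uses exact fractions and one hypothetical set of columns, then confirms that $A^{-1}A = I$. The helper name `inverse_3x3_from_columns` is illustrative.

```python
from fractions import Fraction

def cross(u, v):
    """Cross product of two vectors in R^3."""
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def inverse_3x3_from_columns(x0, x1, x2):
    """Inverse of A = [x0 x1 x2] via rows (x1 x x2), (x2 x x0), (x0 x x1) over det A."""
    det = dot(x0, cross(x1, x2))  # scalar triple product equals det A
    if det == 0:
        raise ValueError("columns are linearly dependent; A is not invertible")
    rows = [cross(x1, x2), cross(x2, x0), cross(x0, x1)]
    return [[Fraction(entry, det) for entry in row] for row in rows]

x0, x1, x2 = (2, 0, 1), (1, 1, 0), (0, 1, 3)    # hypothetical columns, det A = 7
A = [[x0[i], x1[i], x2[i]] for i in range(3)]    # matrix with columns x0, x1, x2
A_inv = inverse_3x3_from_columns(x0, x1, x2)

# A^-1 A should be the 3 x 3 identity matrix.
product = [[sum(A_inv[i][k] * A[k][j] for k in range(3)) for j in range(3)]
           for i in range(3)]
print(product == [[1, 0, 0], [0, 1, 0], [0, 0, 1]])  # True
```

Because the triple product is zero exactly when the three columns are dependent, the same determinant that scales the formula also signals when no inverse exists.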

The determinant of A is $a(mb) - b(ma) = 0$. Since the determinant is zero, the matrix does not have an inverse. If a matrix A is not square, then either the row vectors or the column vectors of A are linearly dependent.
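Take A to have first column $(a, b)$ and second column $m(a, b)$. The algebra $a(mb) - b(ma) = 0$ already shows the determinant vanishes for every choice of $a$, $b$, $m$. The sketch below is only a numerical spot check on a few arbitrary triples, and the helper names are hypothetical.

```python
def det2(M):
    """Determinant of a 2 x 2 matrix stored as a list of rows."""
    (p, q), (r, s) = M
    return p * s - q * r

def multiple_column_matrix(a, b, m):
    """Hypothetical helper: first column (a, b), second column m * (a, b)."""
    return [[a, m * a],
            [b, m * b]]

# Each determinant is a*(m*b) - b*(m*a) = 0.
samples = [(1, 2, 3), (4, -1, 0), (-5, 7, 2)]
dets = [det2(multiple_column_matrix(a, b, m)) for a, b, m in samples]
print(dets)  # [0, 0, 0]
```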

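If the columns of A are linearly dependent, then $a_1\mathbf{c}_1 + \cdots + a_n\mathbf{c}_n = \mathbf{0}$ for some scalars $a_1, \dots, a_n$, not all 0. Then $A\mathbf{v} = \mathbf{0}$ where $\mathbf{v} = (a_1, \dots, a_n) \neq \mathbf{0}$. So A is not invertible, since otherwise multiplying by $A^{-1}$ would give $\mathbf{v} = A^{-1}\mathbf{0} = \mathbf{0}$, a contradiction.

A linear map $L: U \to V$ is a bijection when, for a basis $N$ of $U$, the images under $L$ of the vectors in $N$ are distinct and together form a basis $L(N)$ for the vector space $V$.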

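(a) Either the row vectors or the column vectors of a square matrix are linearly independent. This is false. For a square matrix, the rows are independent exactly when the columns are, so a singular matrix such as the zero matrix has neither.

Facts about linear independence.

$A = \begin{bmatrix} a & ma \\ b & mb \end{bmatrix}$.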

A wide matrix (a matrix with more columns than rows) has linearly dependent columns. For example, four vectors in $\mathbb{R}^3$ are automatically linearly dependent.
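The sketch below makes "automatically dependent" concrete. It finds an explicit dependence among four vectors in $\mathbb{R}^3$, stored as the columns of a hypothetical $3 \times 4$ matrix. It row-reduces over exact fractions and reads off one nonzero null-space vector. A wide matrix has more columns than there are rows to hold pivots, so at least one free variable always exists. The function name `null_vector` is illustrative.

```python
from fractions import Fraction

def null_vector(M):
    """Return one nonzero x with M x = 0, or None if only x = 0 works.

    Reduces M to reduced row echelon form over exact fractions, sets the
    first free variable to 1 and every other free variable to 0.
    """
    R = [[Fraction(v) for v in row] for row in M]
    rows, cols = len(R), len(R[0])
    pivots = []  # pivot column of each nonzero row, in order
    r = 0
    for c in range(cols):
        p = next((i for i in range(r, rows) if R[i][c] != 0), None)
        if p is None:
            continue  # no pivot in this column: it is a free column
        R[r], R[p] = R[p], R[r]
        piv = R[r][c]
        R[r] = [v / piv for v in R[r]]  # scale the pivot to 1
        for i in range(rows):
            if i != r and R[i][c] != 0:  # clear column c in every other row
                f = R[i][c]
                R[i] = [a - f * b for a, b in zip(R[i], R[r])]
        pivots.append(c)
        r += 1
        if r == rows:
            break
    free = [c for c in range(cols) if c not in pivots]
    if not free:
        return None
    x = [Fraction(0)] * cols
    x[free[0]] = Fraction(1)
    for row_idx, c in enumerate(pivots):
        x[c] = -R[row_idx][free[0]]  # pivot variable solves its row equation
    return x

# Four hypothetical vectors in R^3, stored as the columns of a 3 x 4 matrix.
M = [[1, 2, 3, 4],
     [5, 6, 7, 8],
     [9, 10, 11, 12]]
x = null_vector(M)
print([str(v) for v in x])  # ['1', '-2', '1', '0']
```

The result reads as $\mathbf{c}_1 - 2\mathbf{c}_2 + \mathbf{c}_3 + 0\,\mathbf{c}_4 = \mathbf{0}$, which is easy to confirm by hand.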

True-False Exercises. In parts (a) through (j), determine whether the statement is true or false, and justify your answer. This is not what we want, since the vectors trace the same line. If A is invertible, then for every $\mathbf{b}$ there is an $\mathbf{x}$ such that $A\mathbf{x} = \mathbf{b}$.

Note that a tall matrix may or may not have linearly independent columns. Therefore the columns of A are linearly independent. Therefore, by the equivalence of (j) and (n) in the Invertible Matrix Theorem, the rows of A do not span $\mathbb{R}^4$.
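For a tall $3 \times 2$ matrix there is a quick test: its two columns in $\mathbb{R}^3$ are linearly dependent exactly when their cross product is the zero vector. The sketch below shows one illustrative tall matrix of each kind. The helper name is hypothetical.

```python
def cross(u, v):
    """Cross product of two vectors in R^3."""
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])

def columns_dependent_3x2(M):
    """True if the two columns of a 3 x 2 matrix (list of rows) are linearly dependent."""
    c1 = [row[0] for row in M]
    c2 = [row[1] for row in M]
    return cross(c1, c2) == (0, 0, 0)

tall_indep = [[1, 0], [0, 1], [1, 1]]  # columns (1,0,1) and (0,1,1)
tall_dep = [[1, 2], [2, 4], [3, 6]]    # column 2 = 2 * column 1
print(columns_dependent_3x2(tall_indep))  # False
print(columns_dependent_3x2(tall_dep))    # True
```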

Since $A\mathbf{x} = \mathbf{0}$ has only the trivial solution, the columns of A must be linearly independent. Let the matrix be A, and let its columns be $\mathbf{c}_1, \mathbf{c}_2, \dots, \mathbf{c}_n$.

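The vectors $\mathbf{a} = (4, 2)$ and $\mathbf{b} = (2, 1)$ are dependent: $\mathbf{a} = 2\mathbf{b}$, since $2(2, 1) = (4, 2)$. Also, both vectors have the same slope ($2/4 = 1/2$). Equal slopes are a necessary condition for the vectors to be dependent, and for nonzero, non-vertical vectors in $\mathbb{R}^2$ they are also sufficient.

If C is a $6 \times 6$ matrix and the equation $C\mathbf{x} = \mathbf{v}$ is consistent for every $\mathbf{v}$ in $\mathbb{R}^6$, is it possible that for some $\mathbf{v}$ the equation $C\mathbf{x} = \mathbf{v}$ has more than one solution? No. By the Invertible Matrix Theorem, C is invertible, so each equation has exactly one solution, $\mathbf{x} = C^{-1}\mathbf{v}$.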
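The check for $\mathbf{a} = (4, 2)$ and $\mathbf{b} = (2, 1)$ takes only a couple of lines. Two vectors in $\mathbb{R}^2$ are linearly dependent exactly when the $2 \times 2$ determinant $a_1b_2 - a_2b_1$ is zero, and here the multiplier is 2. A minimal sketch:

```python
from fractions import Fraction

a = (4, 2)
b = (2, 1)

# 2 x 2 determinant with a and b as columns: zero exactly when a and b are dependent.
det = a[0] * b[1] - a[1] * b[0]

# Multiplier m with a = m * b (this shortcut assumes b[0] != 0).
m = Fraction(a[0], b[0])

print(det)                           # 0
print(m)                             # 2
print(tuple(m * v for v in b) == a)  # True
```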

Linear Algebra Example Problems Linearly Independent Vectors 1 Youtube

Http Www Cse Iitm Ac In Vplab Courses Larp 2018 Vector Space 2 3 Pdf

Linear Algebra Example Problems Onto Linear Transformations Youtube

Part 8 Linear Independence Rank Of Matrix And Span By Avnish Linear Algebra Medium

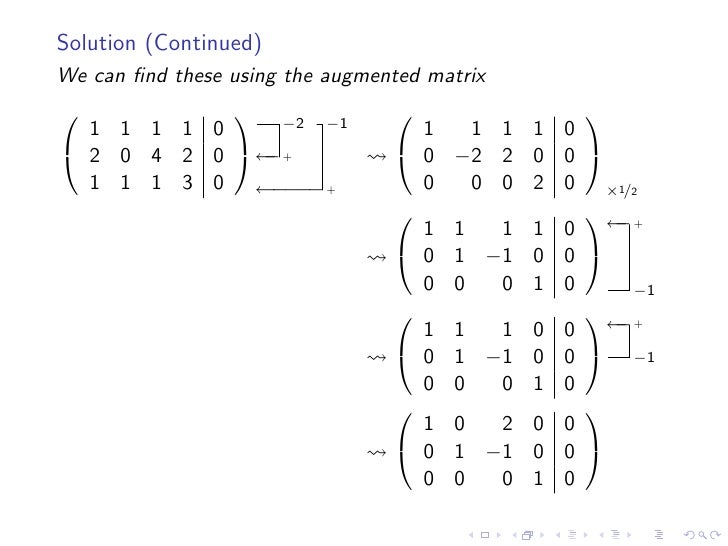

Section 1 7 Linear Independence And Nonsingular Matrices Ppt Video Online Download

Linear Algebra Example Problems Linearly Independent Vectors 1 Youtube

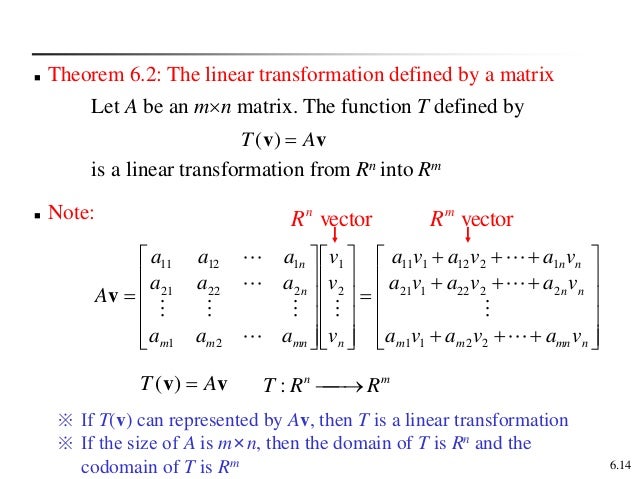

Linear Transformations And Matrices

How Do I Use The Determinant Of A Matrix To Show That Some Vectors Are Linearly Independent Mathematics Stack Exchange

Full Rank Design Matrix From Overdetermined Linear Model Cross Validated

Linear Equations In Linear Algebra Ppt Video Online Download

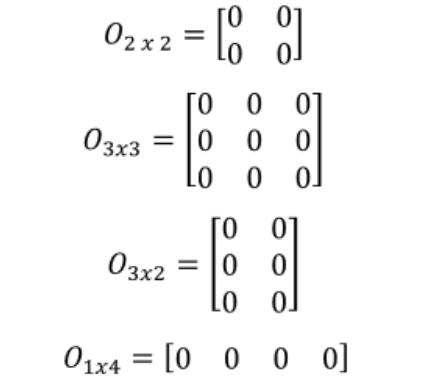

Definition Of A Zero Matrix Studypug

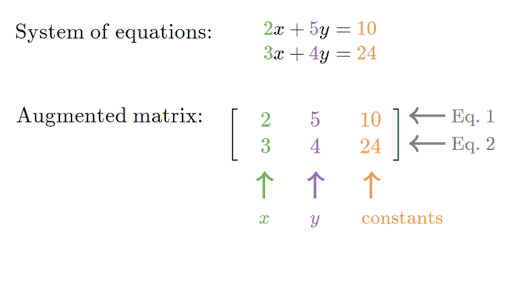

Representing Linear Systems With Matrices Article Khan Academy

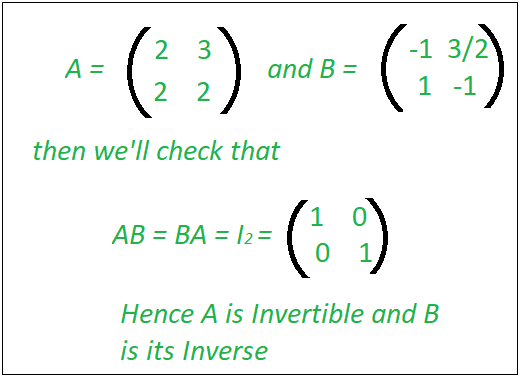

Check If A Matrix Is Invertible Geeksforgeeks

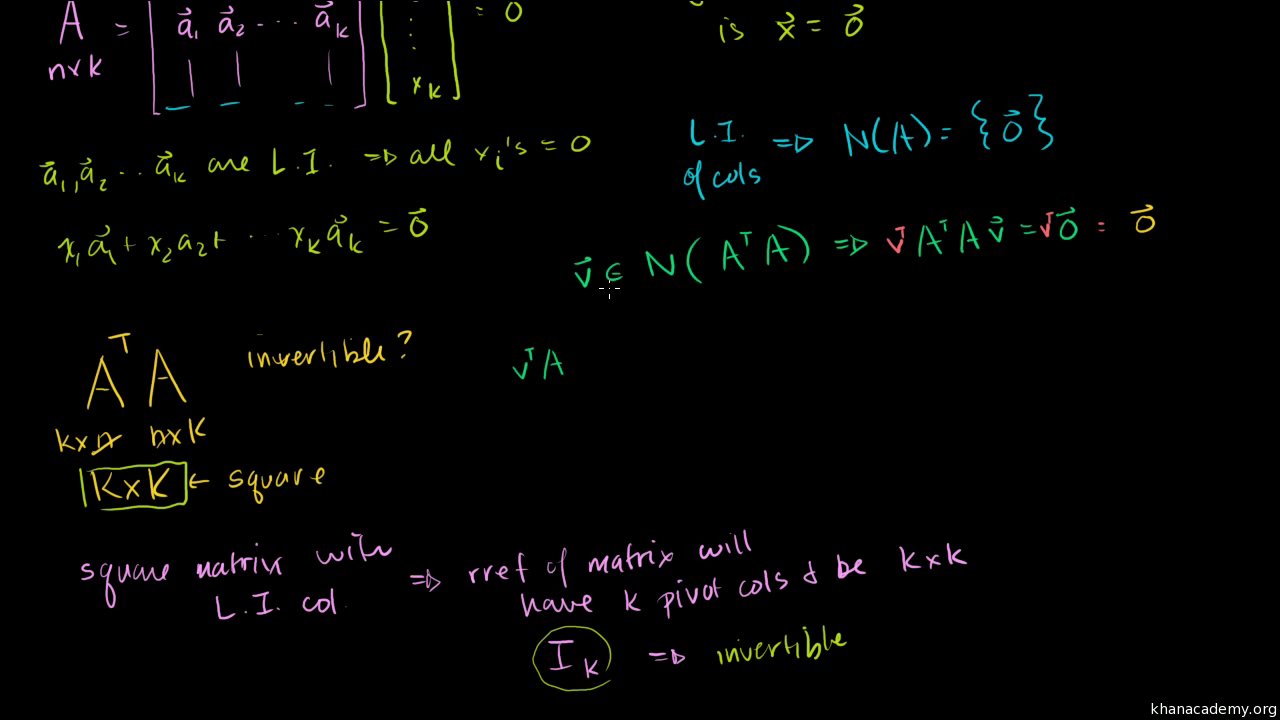

Showing That A Transpose X A Is Invertible Video Khan Academy

Showing That A Transpose X A Is Invertible Video Khan Academy